Read it again: there was no leak. The information was shared with industry veterans.

It certainly is a treasure map for SEOs. Solving this puzzle would require our collective insight, critical thinking and analysis – if that’s even possible.

Why the pessimism?

It’s like when a chef tells you the ingredients of the delicious dish you’ve just eaten. You hurry to note it all down as he turns away.

But when you try recreating the same recipe at home, it’s nowhere near what you experienced at the restaurant.

It’s the same with the Google document “leak.” My perspective is that our industry was given the types of ingredients used to determine the top search results, but no one knows how it’s all put together.

Not even the brightest two among us who were given access to the documentation.

If you recall…

In May, thousands of internal documents, which appear to have come from Google’s internal Content API Warehouse, were claimed to have been leaked (per publication headlines).

In reality, they were shared with prominent SEO industry veterans, including Rand Fishkin, SparkToro co-founder. Fishkin outright stated in his article that the information was shared with him.

In turn, he shared the documentation with Mike King, owner of iPullRank. Together and separately, they both reviewed the documentation and provided their own respective POVs in their write-ups. Hence, my take on all of this is that it’s strategic information sharing.

That fact alone made me question the purpose of sharing the internal documentation.

It seems the goal was to give it to SEO experts so they could analyze it and help the broader industry understand what Google uses as signals for ranking and assessing content quality.

“You don’t pay a plumber to bang on the pipe, you pay them for knowing where to bang.”

There’s no leak. An anonymous source wanted the information to be more broadly available.

Going back to the restaurant metaphor, we can now see all the ingredients, but we don’t know what to use, how much, when and in what sequence (?!), which leaves us to continue speculating.

The reality is we shouldn’t know.

Google Search is a product that’s part of a business owned by its parent company, Alphabet.

Do you really think they’d fully disclose documentation about the inner workings of their proprietary algorithms to the world? That’s business suicide.

This is a taste.

For established SEOs, the shared Google documentation that’s now public sheds light on some of the known ranking factors, which largely haven’t changed:

- Ranking features: 2,596 modules are represented in the API documentation with 14,014 attributes.

- Existence of weighting of the factors.

- Links matter.

- Successful clicks matter.

- Brand matters (build, be known).

- Entities matter.

Here’s where things get interesting because the existence of some features means Google can boost or demote search results:

- SiteAuthority – Google uses it but has also denied having a website authority score.

- King’s article has a section called “What are Twiddlers.” While he goes on to say there’s little information about them, they’re essentially re-ranking functions or calculations.

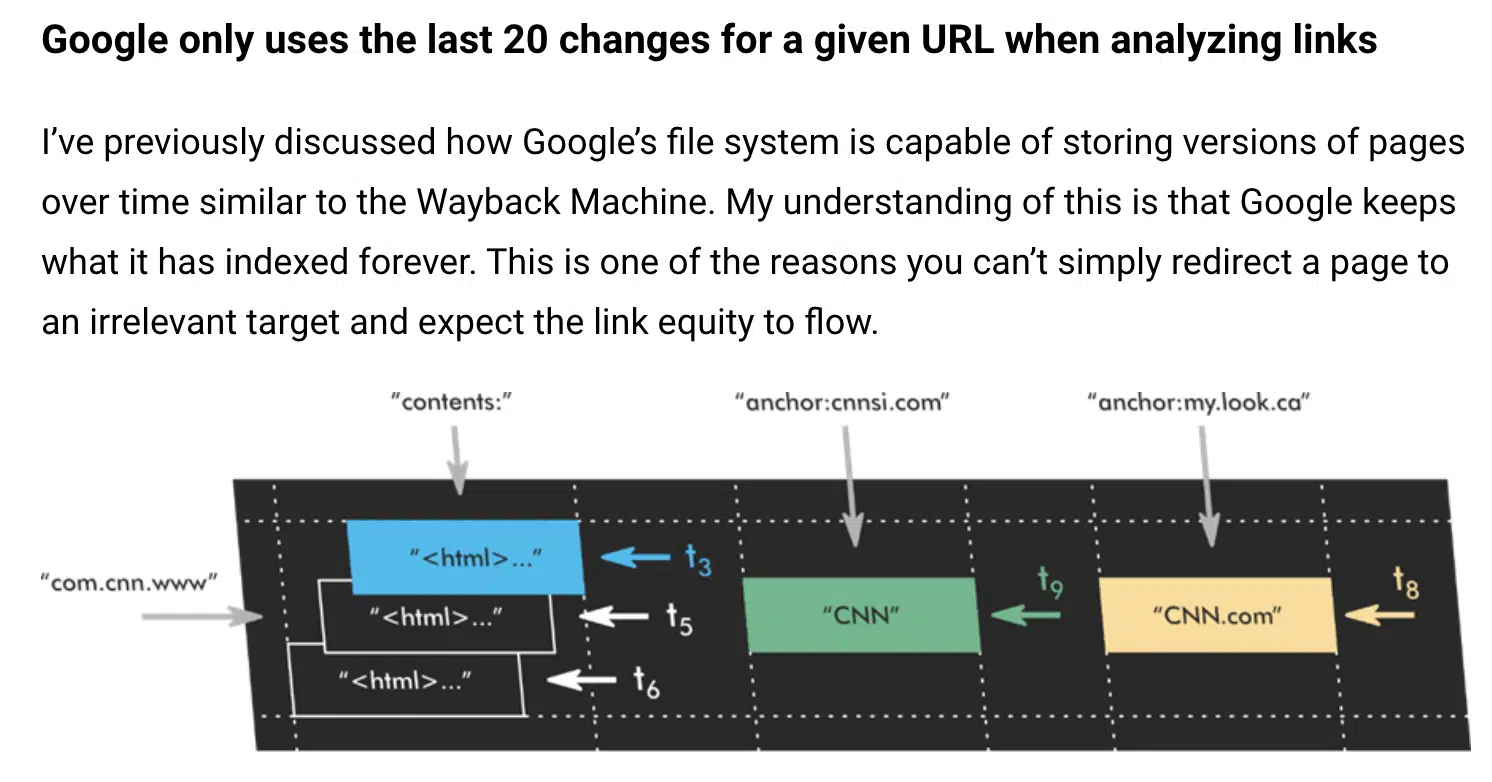

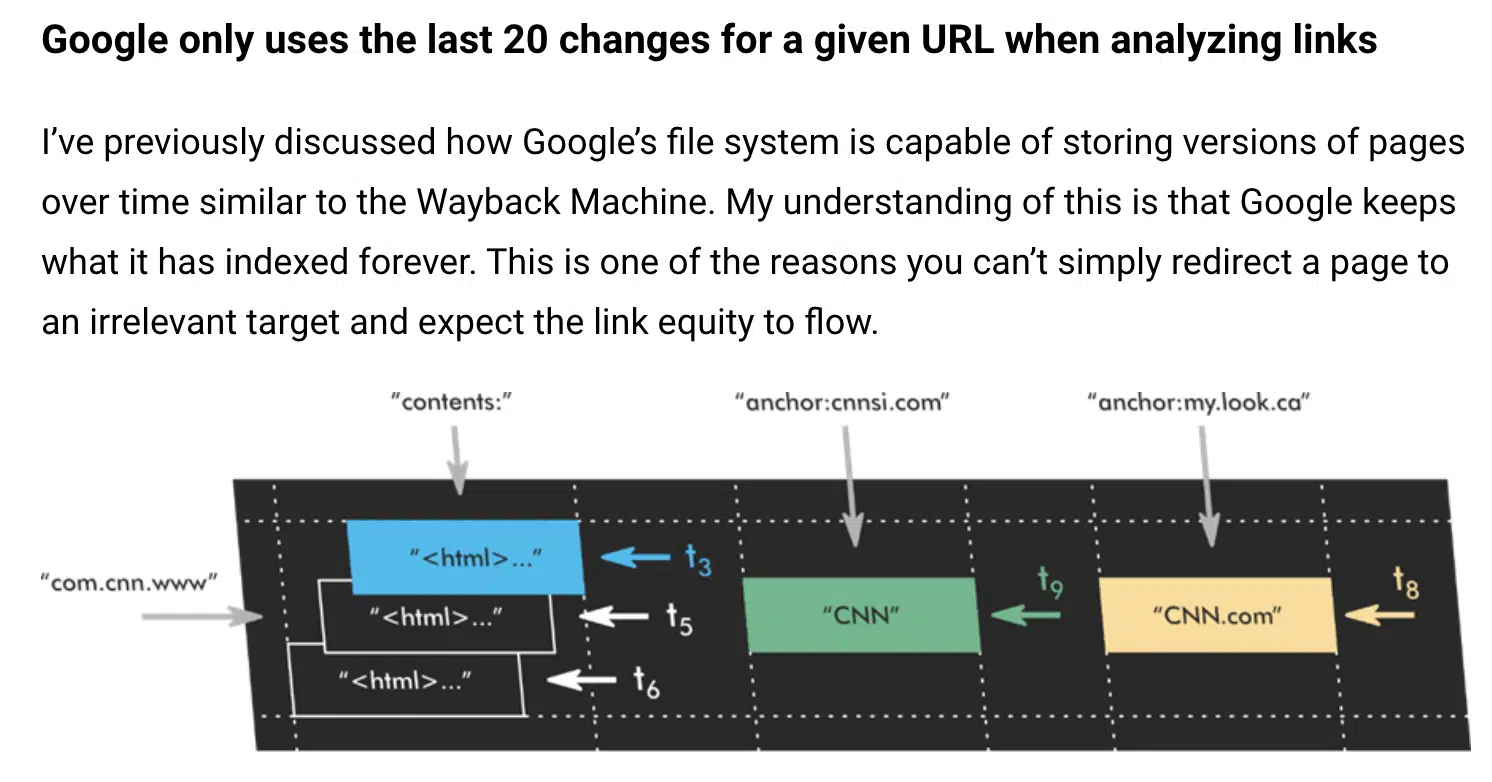

- King’s article states that “Google only uses the last 20 changes of a URL when analyzing links.” Again, this sheds some light on the idea that Google keeps all the changes they’ve ever seen for a page.
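To picture what a “last 20 changes” window could look like, here is a minimal Python sketch. It is purely illustrative: the `UrlHistory` class, its fields and the `window` parameter are hypothetical names, and nothing here reflects how Google actually stores or reads page versions.

```python
from dataclasses import dataclass, field


@dataclass
class UrlHistory:
    """Hypothetical store: keeps every version seen, exposes only a recent window."""

    url: str
    window: int = 20  # assumed from the "last 20 changes" claim
    versions: list[str] = field(default_factory=list)

    def record(self, snapshot: str) -> None:
        # Only store a new version when the content actually changed.
        if not self.versions or self.versions[-1] != snapshot:
            self.versions.append(snapshot)

    def recent(self) -> list[str]:
        # The slice an analysis step would look at: the newest `window` versions.
        return self.versions[-self.window:]


history = UrlHistory("https://example.com/page")
for i in range(25):
    history.record(f"version {i}")

print(len(history.versions), len(history.recent()))
```

The point of the sketch is the split between what is kept (everything) and what is used (a bounded, recent slice), which is the distinction the quote hints at.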

Both Fishkin’s and King’s articles are lengthy, as one might expect.

If you’re going to spend time reading through either article or – tip of the cap to you – the documents themselves, may you be guided by this quote by Bruce Lee that inspired me:

“Absorb what is useful, discard what is not, add what is uniquely your own.”

Which is what I’ve done below.

My advice is to bookmark these articles because you’ll want to keep coming back to read through them.

Rand Fishkin’s insights

I found this part very interesting:

“After walking me through a handful of these API modules, the source explained their motivations (around transparency, holding Google to account, etc.) and their hope: that I would publish an article sharing this leak, revealing some of the many interesting pieces of data it contained and refuting some “lies” Googlers “had been spreading for years.”

The Google API Content Warehouse exists in GitHub as a repository and directory explaining various “API attributes and modules to help familiarize those working on a project with the data elements available.”

It’s a map of what exists, what was once used and what is potentially being used now.

Which ones and when is what remains open to speculation and interpretation.

Smoking gun

We should care about something like this that’s legitimate yet speculative because, as Fishkin puts it, it’s as close to a smoking gun as anything since Google’s execs testified in the DOJ trial last year.

Speaking of that testimony, much of it is corroborated and expanded on in the document leak, as King details in his post. 👀 But who has time to read through and dissect all that?

Fishkin and King, along with the rest of the SEO industry, will be mining this set of files for years to come. (Including local SEO expert Andrew Shotland.)

To start out, Fishkin focuses on five useful takeaways:

- NavBoost and the use of clicks, CTR, long vs. short clicks and user data.

- Use of Chrome browser clickstreams to power Google Search.

- Whitelists in travel, COVID-19 and politics.

- Employing quality rater feedback.

- Google uses click data to determine how to weight links in rankings.

Here’s what I found most interesting:

NavBoost is one of Google’s strongest ranking signals

Fishkin cites “Click Signals In NavBoost” in his article, which is where sharing proprietary information is helpful: even more of us now know we should be doing our homework on the NavBoost system. Thanks, Rand!

In case you weren’t aware, that’s coming from Google engineer Paul Haahr. One time, we were in the same conference room together. I feel like I got a tad smarter listening to him.

QRG feedback may be directly involved in Google’s search system

Seasoned SEOs know the QRG is a great source of tangible information for evaluating one’s own website against the criteria Google asks paid human raters to use when judging the quality of web results. (It’s the OG SEO treasure map.)

What’s important about what we learned from this documentation is that quality raters’ feedback might play a direct role in Google’s search system, not just serve as surface-level training data.

This is another reason to carefully read and understand the documentation.

But seriously, high level it for me, Rand

Now, for the non-technical folks, Fishkin also provides an overview for marketers in a section titled “Big Picture Takeaways for Marketers who Care About Organic Search Traffic.” It’s great. It covers things that resonate, like:

- The importance of “building a notable, popular, well-recognized brand in your space, outside of Google search.”

Big, established and trusted brands are what Google likes to send traffic to, rather than smaller publishers. Who knows, maybe that landscape will shift with the latest August 2024 core update.

- He mentions that E-E-A-T (experience, expertise, authoritativeness, trustworthiness) exists, but there are no direct correlations in the documentation.

I don’t think that makes these aspects any less important because there are quite a few actionable steps a marketer can take to better reflect these quality/quantity signals.

Fishkin also points to his research on organic traffic distribution and his hypothesis that for most SMBs and small website publishers, SEO yields poor returns. “SEO is a big brand, popular domain’s game.”

The data doesn’t lie, but the broader context is in line with what former in-house enterprise SEO turned consultant Eli Schwartz says: SEO needs to have a product-market fit because, in my experience, it’s an awareness and acquisition channel, not one for demand creation.

Read Fishkin’s article if you’re a marketer looking to get a basic understanding of the shared documentation. King’s article is a lot lengthier and more nuanced, for the more seasoned SEOs.

Mike King’s insights

For starters, I completely agree with King here:

“My advice to future Googlers speaking on these topics: Sometimes it’s better to simply say ‘we can’t talk about that.’ Your credibility matters and when leaks like this and testimony like the DOJ trial come out, it becomes impossible to trust your future statements.”

I realize Googlers don’t want to tip their hand by not saying something, but when strategic sharing of information like this surfaces, people still draw their own conclusions.

It’s no secret that Google uses multiple ranking factors. This documentation pointed to more than 14,000 ranking features, to be exact. King notes this in his article.

King also cites a shared environment where “all the code is stored in one place, and any machine on the network can be part of any of Google’s systems.”

Talk Matrix to me, Neo.

Honestly, though, King’s thorough post will be one of those forever-open Chrome tabs I’ll always have.

I did appreciate this high-level section titled “Key revelations that may impact how you do SEO.” This is what those of us skim readers came for.

King helps SEOs boil the ocean in this section by giving his main takeaways. My personal top takeaways from this section were these:

“The bottom line here is that you need to drive more successful clicks using a broader set of queries and earn more link diversity if you want to continue to rank. Conceptually, it makes sense because a very strong piece of content will do that. A focus on driving more qualified traffic to a better user experience will send signals to Google that your page deserves to rank.”

Then this:

“Google does explicitly store the author associated with a document as text.”

So, while E-E-A-T may consist of nebulous aspects of expertise and authority that are hard to score, they’re still accounted for. That’s enough proof for me to continue advising for it and investing in it.

Lastly, this: (within the Demotions section)

“Anchor Mismatch – When the link does not match the target site it’s linking to, the link is demoted in the calculations. As I’ve said before, Google is looking for relevance on both sides of a link.”

Lightbulb moment. In the back of my head, I know the importance of anchor text. But it was good to be reminded of the specific way in which relevance is communicated.

Internal and external linking can seem like an innocuous technical SEO aspect, but they serve as a reminder of the care required when using links.
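As a rough way to audit your own anchor text for the kind of mismatch King describes, the sketch below scores how many anchor words also appear in the target page’s title. This is an assumption-heavy stand-in: the `tokens` and `anchor_overlap` helpers and the sample links are hypothetical, and simple word overlap is not how Google measures relevance.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercase word tokens; a deliberately crude stand-in for real relevance models."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def anchor_overlap(anchor_text: str, target_title: str) -> float:
    """Share of anchor words that also appear in the target page title (0.0 to 1.0)."""
    anchor = tokens(anchor_text)
    if not anchor:
        return 0.0
    return len(anchor & tokens(target_title)) / len(anchor)


# Hypothetical links: (anchor text, title of the page being linked to)
links = [
    ("kentucky derby rentals", "Kentucky Derby Rentals Near Churchill Downs"),
    ("click here", "Kentucky Derby Rentals Near Churchill Downs"),
]

for anchor_text, title in links:
    print(f"{anchor_text!r}: {anchor_overlap(anchor_text, title):.2f}")
```

A low score is only a prompt to look at the link by hand; generic anchors such as “click here” are the usual culprits.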

See, it’s helpful to read other SEO veterans’ reviews because you just might learn something new.

My top 5 takeaways for SEOs from the sharing of internal Google documents

You’ve heard from the best, now here are my recommendations for websites that want to benefit from sustainable, organic growth and revenue opportunities.

Always remember, online, the two primary “customers” of your website are:

- Search engine bots (i.e., Googlebot).

- Humans seeking solutions to their problems, challenges and needs.

Your website needs to be cognizant of both and focus on maintaining and improving these factors:

1. Discovery

Ensure your website is crawlable by search engine bots so that it’s in the online index.
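One quick crawlability check is to confirm that robots.txt is not blocking the pages you want indexed. The sketch below uses Python’s standard `urllib.robotparser` against a made-up robots.txt; the rules, the `example.com` URLs and the `is_crawlable` helper are assumptions for illustration only, and passing this check does not guarantee a page gets indexed.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, parsed locally so no network request is made.
robots_txt = """\
User-agent: *
Disallow: /admin/

User-agent: Googlebot
Disallow: /drafts/
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())


def is_crawlable(path: str, user_agent: str = "Googlebot") -> bool:
    """True if the parsed rules allow `user_agent` to fetch the given path."""
    return parser.can_fetch(user_agent, f"https://example.com{path}")


for path in ["/blog/post", "/drafts/unfinished"]:
    print(path, is_crawlable(path))
```

To test a live site instead, `RobotFileParser` also offers `set_url()` and `read()` to download the real file.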

2. Decipher

Make sure search engines and humans easily understand what each page on your website is about. Use appropriate headings and structure, relevant internal links, etc.

Yes, I said each page because people can land on any page of your website from a web search. They don’t automatically start on the homepage.

3. User experience

UX matters, again, for bots and people.

This is a double-edged sword: the page needs to load quickly (think CWV) to be browsed, and the overall user interface needs to be designed to serve the human user’s needs. Ask, “What’s their intent on that page?”

A good UX for a bot typically means the site is technically sound and sends clear signals.

4. Content material

What are you known for? These are your keywords, the information you provide, the videos and the demonstrated experience and expertise (E-E-A-T) in your vertical.

5. Mobile-friendly

Let’s face it, Googlebot looks for the mobile version of your website first to crawl. “Mobilegeddon” has been a thing since 2015.

Why you should always test and learn

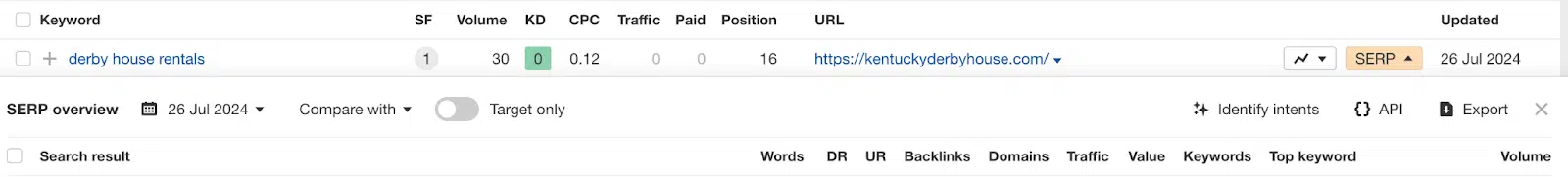

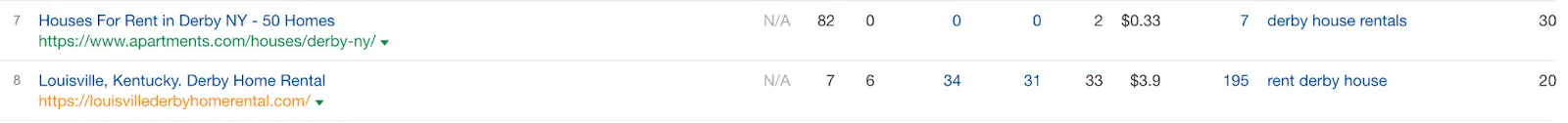

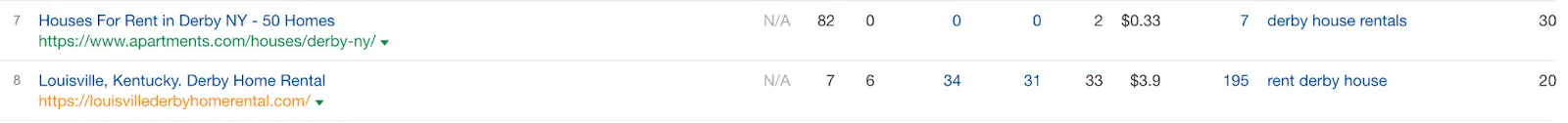

For example, the rankings of exact match domains continue to fluctuate constantly.

As someone with a background in local SEO search directories, I continue to evaluate whether exact-match domains gain or lose rankings because Google’s advancements in this area interest me.

“Exact Match Domains Demotion – In late 2012, Matt Cutts announced that exact match domains would not get as much value as they did historically. There is a specific feature for their demotion.”

Personally, in my research and observation working with small businesses on keywords with very, very specific and low (10 or fewer) search volume, I haven’t found this to be an absolute. I may have found an outlier. Ha, or an actual leak.

Here’s what I mean: every first Saturday in May, a lot of people will want to be in Kentucky at Churchill Downs for the fastest two minutes in sports, the Kentucky Derby.

The rental homes and properties SERP is dominated by marketplace sites, from Airbnb to Kayak to VRBO and Realtor.com. But there’s one hanging on in this pack. In position 8, it’s an exact match domain.

Has it been demoted? Maybe.

It’s also, in its own way, an aggregator site, listing a handful of local rental properties.

So while it’s not a name brand aggregator site, it has hyper-local content and, therefore, may continue to weather the storm.

It could be a core update away from being bounced onto page 2. Nothing to do but ride it until it bucks you.

Heck, the organic listing above it is ranking for the incorrect location, “Derby, NY.”

So, is Google search perfectly ranking all query types? Neigh.

NavBoost highlights

For those who haven’t been paying close enough attention, meet NavBoost.

The documentation mentions that NavBoost is a system that employs click-driven measures to boost, demote, or otherwise reinforce a ranking in Web Search.

Various sources have indicated that NavBoost is “already one of Google’s strongest ranking signals.”

The shared documentation specifies “Navboost” by name 84 times, with five modules featuring Navboost in the title. That’s promising.

There’s also evidence that they contemplate its scoring at the subdomain, root domain and URL level, which inherently indicates they treat different levels of a site differently.
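If you want to look at your own click data through those same three levels, a small Python sketch like the one below can roll clicks up by URL, subdomain and root domain. Everything in it is an assumption for illustration: the `levels` helper, the sample click log and the “last two labels” rule for finding the root domain (which breaks for suffixes such as `.co.uk`). It says nothing about how NavBoost itself scores anything.

```python
from collections import Counter
from urllib.parse import urlsplit


def levels(url: str) -> dict[str, str]:
    """Split a URL into the three levels mentioned above."""
    host = urlsplit(url).hostname or ""
    # Naive assumption: the root domain is the last two labels of the hostname.
    # Real code would consult the Public Suffix List instead.
    root = ".".join(host.split(".")[-2:])
    return {"url": url, "subdomain": host, "root_domain": root}


# Hypothetical click log: (url, number of clicks)
clicks = [
    ("https://blog.example.com/a", 10),
    ("https://blog.example.com/b", 5),
    ("https://shop.example.com/c", 3),
]

totals = {"url": Counter(), "subdomain": Counter(), "root_domain": Counter()}
for url, count in clicks:
    for level, key in levels(url).items():
        totals[level][key] += count

for level, counter in totals.items():
    print(level, dict(counter))
```

Seeing the same clicks at all three levels makes it easier to spot, for instance, a strong subdomain carrying an otherwise quiet root domain.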

It’s worth continuing to research and digest Google’s patent and use of NavBoost.

Conclusion

It’s a gift to continue to have great minds in the SEO space like Fishkin and King, who can distill large amounts of documentation into actionable nuggets for us mortals.

None of us know how the data is used or weighted. But we now know a bit more about what’s collected and that it exists as part of the data set used for evaluation.

In my professional opinion, I’ve always taken statements from Google with a grain of salt because, in a way, I’m also intimately familiar with being a brand ambassador for a publicly traded company.

I don’t think any corporate representative is actively trying to be misleading. Sure, their responses can be cryptic at times, but they fundamentally can’t be explicit about any kind of internal working system because it’s core to the business’s success.

That’s one reason why Google’s DOJ testimony is so compelling. But it can be difficult to understand. At the very least, Google’s own Search Central documentation is often more succinct.

The shared internal documentation is the best we’re going to get in terms of learning what’s actually included in Google’s “secret sauce.”

Because we’ll never fully know, my practical advice to practicing SEOs is to take this additional information we now have and to keep testing and learning from it.

After all, Google and all of its algorithms are, in parallel, doing the same along the path of being better than they were the day before.

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.