The new oil isn’t data or attention. It’s words. The differentiator to build next-gen AI models is access to content when normalizing for computing power, storage, and energy.

But the web is already getting too small to satiate the hunger for new models.

Some executives and researchers say the industry’s need for high-quality text data could outstrip supply within two years, potentially slowing AI’s development.

Even fine-tuning doesn’t seem to work as well as simply building more powerful models. A Microsoft research case study shows that effective prompts can outperform a fine-tuned model by 27%.

We were wondering if the future will consist of many small, fine-tuned models or a few big, all-encompassing ones. It seems to be the latter.

There is no AI strategy without a data strategy.

Hungry for more high-quality content to develop the next generation of large language models (LLMs), model developers start to pay for natural content and revive their efforts to label synthetic data.

For content creators of any kind, this new flow of money could carve the path to a new content monetization model that incentivizes quality and makes the web better.

Image Credit: Lyna ™

KYC: AI

If content is the new oil, social networks are oil rigs. Google invested $60 million a year in using Reddit content to train its models and surface Reddit answers at the top of search. Pennies, if you ask me.

YouTube CEO Neal Mohan recently sent a clear message to OpenAI and other model developers that training on YouTube is a no-go, defending the company’s massive oil reserves.

The New York Times, which is currently running a lawsuit against OpenAI, published an article stating that OpenAI developed Whisper to train models on YouTube transcripts, and Google uses content from all of its platforms, like Google Docs and Maps reviews, to train its AI models.

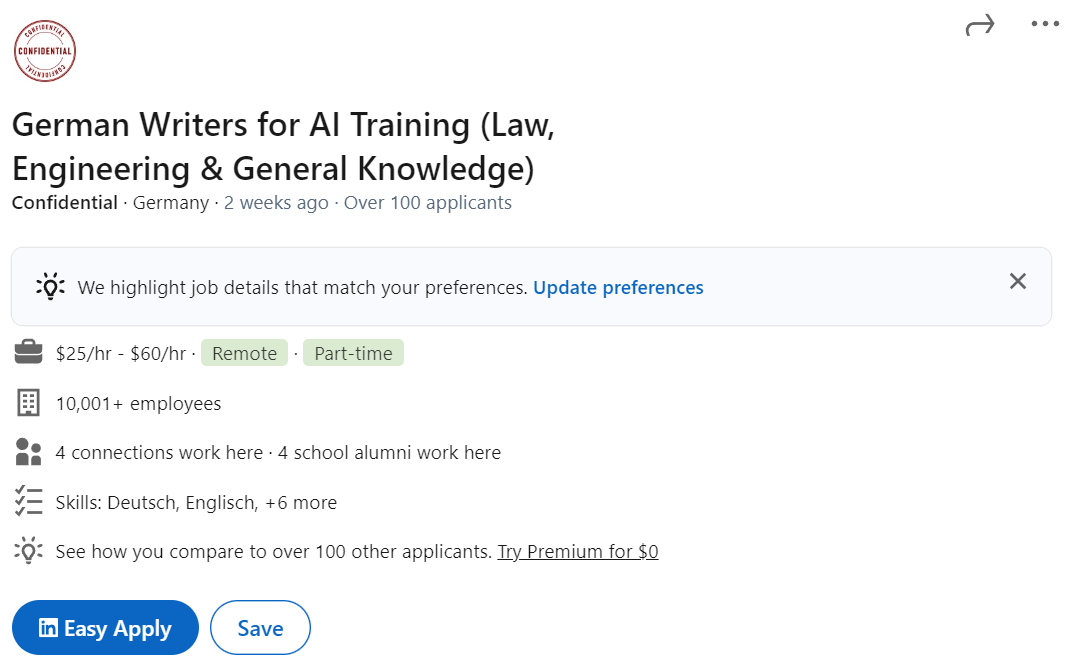

Generative AI data providers like Appen or Scale AI are recruiting (human) writers to create content for LLM training.

Make no mistake, writers aren’t getting rich writing for AI.

For $25 to $50 per hour, writers perform tasks like ranking AI responses, writing short stories, and fact-checking.

Applicants must have a Ph.D. or master’s degree or be currently attending college. Data providers are clearly looking for experts and “good” writers. But the early signs are promising: Writing for AI could be monetizable.

Image Credit: Kevin Indig

Model developers look for good content in every corner of the web, and some are happy to sell it.

Content platforms like Photobucket sell photos for five cents to one dollar apiece. Short-form videos can get $2 to $4; longer videos cost $100 to $300 per hour of footage.

With billions of photos, the company struck oil in its backyard. Which CEO can withstand such a temptation, especially as content monetization is getting harder and harder?

From Free Content:

Publishers are getting squeezed from multiple sides:

- Few are prepared for the death of third-party cookies.

- Social networks send less traffic (Meta) or deteriorate in quality (X).

- Most young people get news from TikTok.

- SGE looms on the horizon.

Paradoxically, labeling AI content better might help LLM development because it’s easier to separate natural from synthetic content.

In that sense, it’s in the interest of LLM developers to label AI content so they can exclude it from training or use it the right way.

Labeling

Drilling for words to train LLMs is just one side of developing next-gen AI models. The other one is labeling. Model developers need labeling to avoid model collapse, and society needs it as a shield against fake news.

A new movement of AI labeling is rising despite OpenAI dropping watermarking due to low accuracy (26%). Instead of labeling content themselves, which seems futile, big tech (Google, YouTube, Meta, and TikTok) pushes users to label AI content with a carrot/stick approach.

Google uses a two-pronged approach to fight AI spam in search: prominently showing forums like Reddit, where content is most likely created by humans, and penalties.

From AIfficiency:

Google is surfacing more content from forums in the SERPs to counter-balance AI content. Verification is the ultimate AI watermarking. Even though Reddit can’t prevent humans from using AI to create posts or comments, chances are lower because of two things Google search doesn’t have: Moderation and Karma.

Yes, Content Goblins have already taken aim at Reddit, but most of the 73 million daily active users provide useful answers. Content moderators punish spam with bans and even kicks. But the most powerful driver of quality on Reddit is Karma, “a user’s reputation score that reflects their community contributions.” Through simple up or downvotes, users can gain authority and trustworthiness, two integral ingredients in Google’s quality systems.

Google recently clarified that it expects merchants not to remove AI metadata from images using the IPTC metadata protocol.

When an image has a tag like compositeSynthetic, Google might label it as “AI-generated” anywhere, not just in shopping. The punishment for removing AI metadata is unclear, but I imagine it like a link penalty.

IPTC is the same format Meta uses for Instagram, Facebook, and WhatsApp. Both companies give IPTC metatags to any content coming out from their own LLMs. The more AI tool makers follow the same guidelines to mark and tag AI content, the more reliable detection systems work.

When photorealistic images are created using our Meta AI feature, we do several things to make sure people know AI is involved, including putting visible markers that you can see on the images, and both invisible watermarks and metadata embedded within image files. Using both invisible watermarking and metadata in this way improves both the robustness of these invisible markers and helps other platforms identify them.
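To make the metadata side of this more concrete, here is a minimal sketch of how a script could check whether an image file carries an IPTC Digital Source Type tag that signals AI involvement. It assumes the tag sits in an uncompressed XMP packet and is written as a URI from the IPTC NewsCodes vocabulary, so a plain byte scan can find it. The function names and the choice of which terms count as "AI-labeled" are my own illustration, not how Google or Meta actually detect these tags.

```python
import re

# IPTC Digital Source Type terms treated as "AI involved" in this sketch.
# Which terms belong in this set is an assumption made for illustration.
SYNTHETIC_TERMS = {
    "trainedAlgorithmicMedia",
    "compositeSynthetic",
}

# The vocabulary is published under this URI prefix; the term is the last path segment.
_PATTERN = re.compile(rb"cv\.iptc\.org/newscodes/digitalsourcetype/([A-Za-z]+)")


def digital_source_types(data: bytes) -> set[str]:
    """Return every Digital Source Type term found in the raw file bytes."""
    return {match.decode("ascii") for match in _PATTERN.findall(data)}


def looks_ai_labeled(data: bytes) -> bool:
    """True if at least one term from SYNTHETIC_TERMS appears in the file."""
    return bool(digital_source_types(data) & SYNTHETIC_TERMS)


# Hypothetical XMP fragment standing in for the bytes of a real image file.
sample = (
    b"<Iptc4xmpExt:DigitalSourceType>"
    b"http://cv.iptc.org/newscodes/digitalsourcetype/compositeSynthetic"
    b"</Iptc4xmpExt:DigitalSourceType>"
)

print(digital_source_types(sample))
print(looks_ai_labeled(sample))
```

For a real file, you would pass `open(path, "rb").read()` instead of `sample`. A byte scan like this only shows that the tag is present. It says nothing about images whose metadata was stripped, which is exactly why platforms pair metadata with invisible watermarks.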

The downsides of AI content are small when the content looks like AI. But when AI content looks real, we need labels.

While advertisers try to get away from the AI look, content platforms prefer it because it’s easy to recognize.

For commercial artists and advertisers, generative AI has the power to massively speed up the creative process and deliver personalized ads to customers on a large scale – something of a holy grail in the marketing world. But there’s a catch: Many images AI models generate feature cartoonish smoothness, telltale flaws, or both.

Consumers are already turning against “the AI look,” so much so that an uncanny and cinematic Super Bowl ad for Christian charity He Gets Us was accused of being born from AI – even though a photographer created its images.

YouTube started enforcing new guidelines for video creators that say realistic-looking AI content needs to be labeled.

Challenges posed by generative AI have been an ongoing area of focus for YouTube, but we know AI introduces new risks that bad actors may try to exploit during an election. AI can be used to generate content that has the potential to mislead viewers – particularly if they’re unaware that the video has been altered or is synthetically created. To better address this concern and inform viewers when the content they’re watching is altered or synthetic, we’ll start to introduce the following updates:

- Creator Disclosure: Creators will be required to disclose when they’ve created altered or synthetic content that’s realistic, including using AI tools. This will include election content.

- Labeling: We’ll label realistic altered or synthetic election content that doesn’t violate our policies, to clearly indicate for viewers that some of the content was altered or synthetic. For elections, this label will be displayed in both the video player and the video description, and will surface regardless of the creator, political viewpoints, or language.

The biggest imminent fear is fake AI content that could influence the 2024 U.S. presidential election.

No platform wants to be the Facebook of 2016, which saw lasting reputational damage that impacted its stock price.

Chinese and Russian state actors have already experimented with fake AI news and tried to meddle with the Taiwanese and coming U.S. elections.

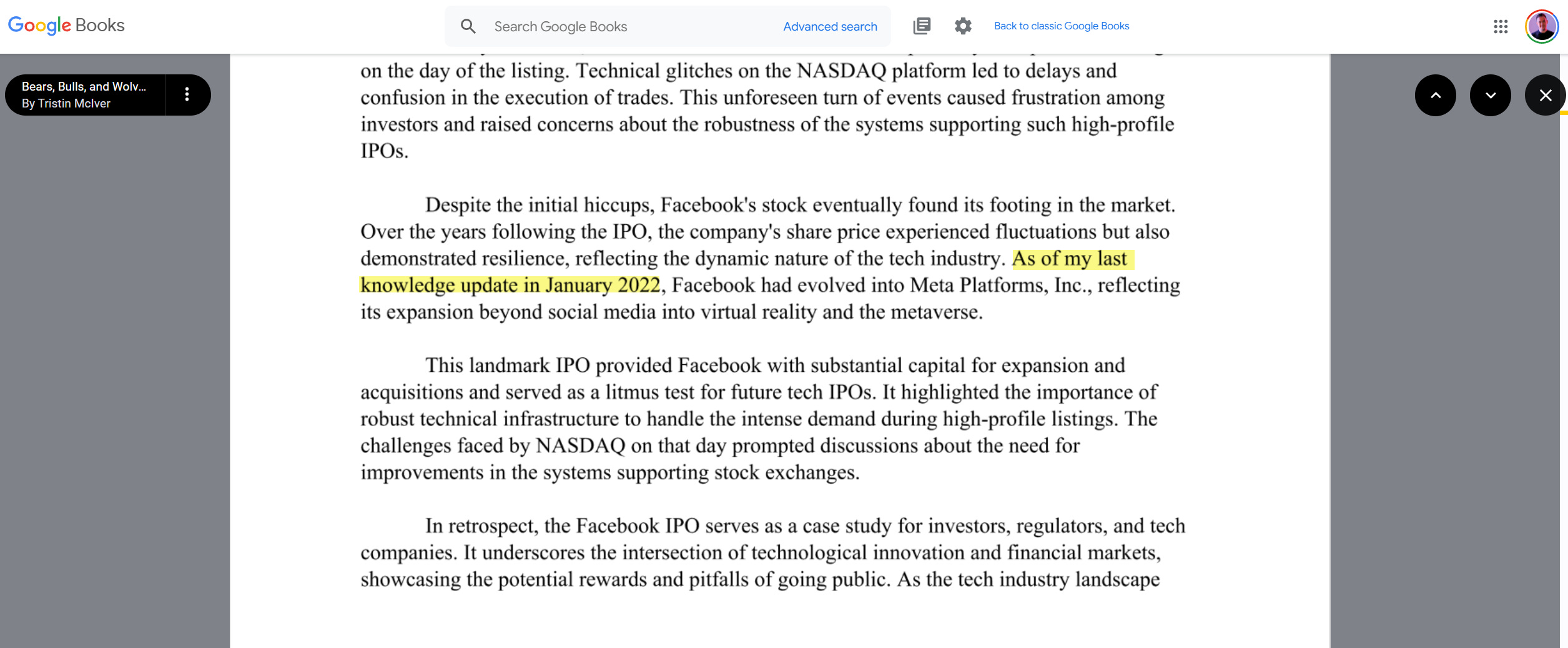

Now that OpenAI is close to releasing Sora, which creates hyperrealistic videos from prompts, it’s not a far jump to imagine how AI videos can cause problems without strict labeling. The situation is tough to get under control. Google Books already features books that were clearly written with or by ChatGPT.

Image Credit: Kevin Indig

Takeaway

Labels, whether mental or visual, influence our decisions. They annotate the world for us and have the power to create or destroy trust. Like category heuristics in shopping, labels simplify our decision-making and information filtering.

From Messy Middle:

Lastly, the idea of category heuristics, numbers customers focus on to simplify decision-making, like megapixels for cameras, offers a path to specify user behavior optimization. An ecommerce store selling cameras, for example, should optimize their product cards to prioritize category heuristics visually. Granted, you first need to gain an understanding of the heuristics in your categories, and they might vary based on the product you sell. I guess that’s what it takes to be successful in SEO these days.

Soon, labels will tell us when content is written by AI or not. In a public survey of 23,000 respondents, Meta found that 82% of people want labels on AI content. Whether common standards and punishments work remains to be seen, but the urgency is there.

There is also an opportunity here: Labels could shine a spotlight on human writers and make their content more valuable, depending on how good AI content becomes.

On top, writing for AI could be another way to monetize content. While current hourly rates don’t make anyone rich, model training adds new value to content. Content platforms could find new revenue streams.

Web content has become extremely commercialized, but AI licensing could incentivize writers to create good content again and untie themselves from affiliate or advertising income.

Sometimes, the contrast makes value visible. Maybe AI can make the web better after all.

For Data-Guzzling AI Companies, the Internet Is Too Small

Inside Big Tech’s Underground Race To Buy AI Training Data

OpenAI Gives Up On Detection Tool For AI-Generated Text

Labeling AI-Generated Images on Facebook, Instagram and Threads

How The Ad Industry Is Making AI Images Look Less Like AI

How We’re Helping Creators Disclose Altered Or Synthetic Content

Addressing AI-Generated Election Misinformation

China Is Targeting U.S. Voters And Taiwan With AI-Powered Disinformation

Google Books Is Indexing AI-Generated Garbage

Our Approach To Labeling AI-Generated Content And Manipulated Media

Featured Image: Paulo Bobita/Search Engine Journal